Engineering

Practical patterns for building autonomous AI systems. How we architect, deploy, and operate AI agents at scale.

Single-agent tools dominate the market, but enterprise customers want teams of agents. An analysis of architectural patterns, coordination strategies, and when to use each approach.

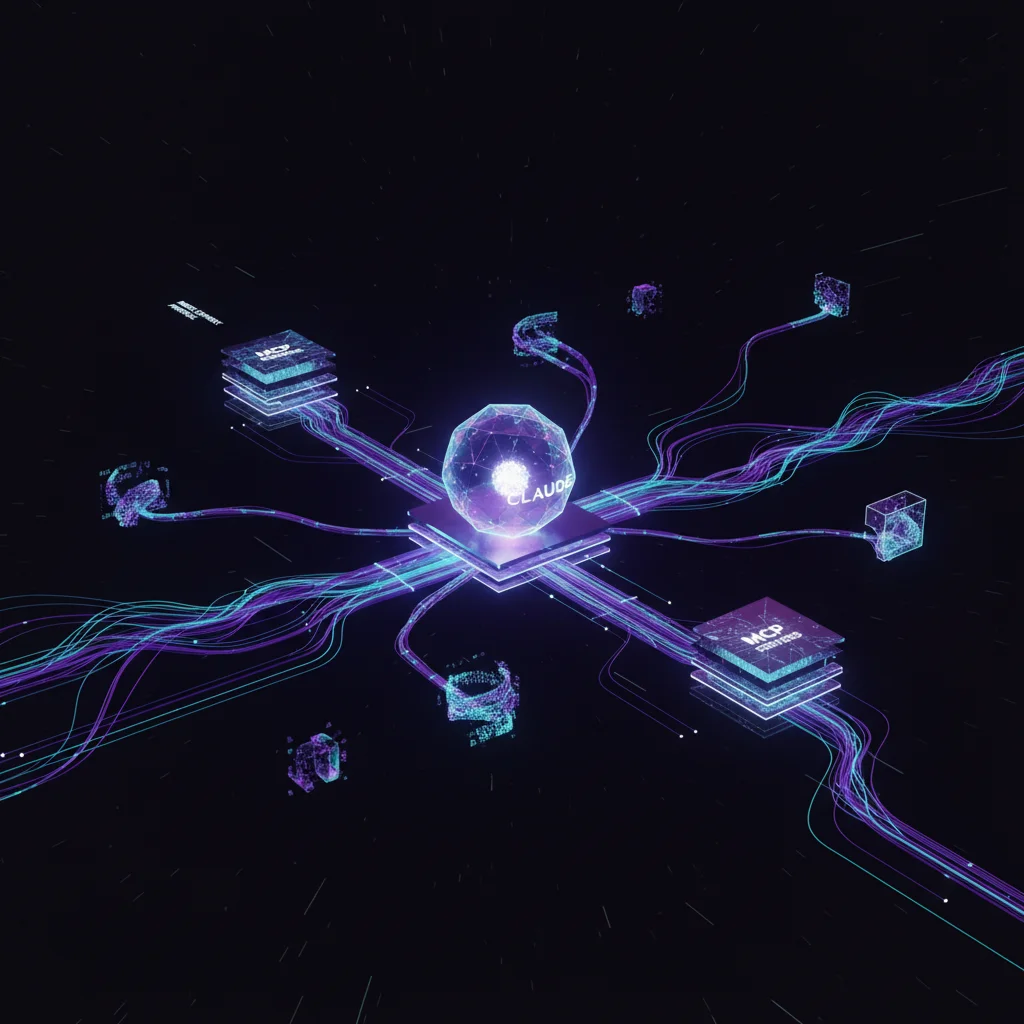

Why we moved from MCP servers to direct CLI tools. Transparent, auditable, context-efficient agent integrations you can actually understand.

When multiple agents work on the same repo, branches collide. Git worktrees let each agent work in isolation. Here's how we use them for parallel execution.

Claude's context window is finite. Bloated context makes agents slower, more expensive, and less effective. Here's how we keep agents lean and effective.

We've evolved our approach. CLI tools are more transparent, auditable, and context-efficient than MCP servers. See our new CLI-first architecture.

AI agents forget everything between sessions. Here's how we built a memory system that lets agents build on previous work, learn from feedback, and maintain context across weeks of operation.

How we structure AI agents into specialized squads—domain-aligned teams that own outputs, maintain memory, and execute autonomously. A practical architecture for multi-agent systems.